Introduction

Imagine a future where Generative AI (GenAI) is not just a tool but a game-changer in every sector. That future has arrived: GenAI, and especially Large Language Models (LLMs) such as GPT, is revolutionizing how we work with data. But there's a catch: the power of GenAI hinges on the quality of the data it feeds on. This is where sophisticated data quality platforms become critical. They are the unsung heroes ensuring that the AI revolution is built on solid ground. In this exploration, we look at why impeccable data quality is not just important but essential in the GenAI era.

The Rise of Generative AI

Large Language Models (LLMs) like GPT have taken the world by storm. These GenAI systems can understand and generate human-like text, which makes them versatile tools for a wide range of applications. From content generation and language translation to data analysis and decision support, GenAI is transforming the way businesses operate, and companies that treat AI as a first-class citizen are reporting substantial productivity gains across disciplines such as data analysis, software engineering, marketing and sales. Many companies across industries are now investing, or planning to invest, in GenAI as a workforce enabler and optimizer. When applied to a company's own (non-public) data, GenAI has the potential to unlock previously unknown insights while streamlining the otherwise tedious process of interrogating data for descriptive, predictive and prescriptive analytics.

However, for LLMs to function at their best, they require high-quality data. Public LLMs like ChatGPT are trained on vast datasets drawn from the internet, whereas privately deployed GenAI systems are typically fine-tuned on, or grounded in, a company's own historical data. In either case, the quality of that data significantly affects performance. Here's why superior data quality is non-negotiable in the GenAI domain:

Accuracy and Reliability: LLMs are often used to make important decisions, generate content, or provide insights. Inaccurate or unreliable data can produce erroneous or skewed outputs that harm a business and its reputation.

Bias Mitigation: LLMs have been criticized for inheriting biases present in their training data. High-quality, representative data helps reduce these biases, supporting fairer and more ethical AI applications.

Efficiency: Poor data quality creates inefficiencies. Noisy or inconsistent data requires more cleaning, preprocessing and rework before and after it reaches an LLM, slowing down operations and increasing costs.

Enhanced Decision-Making: Quality data makes LLM-generated insights more trustworthy, helping decision-makers make informed choices.

Why Qualytics?

Qualytics is a data quality platform designed to address these critical concerns and ensure that your data is primed for optimal LLM performance. Here’s why Qualytics stands out as the right solution:

Automated Data Profiling and Rule Inference: Qualytics offers comprehensive automated data profiling and infers contextual data quality rules from a company's historical data, automatically identifying outliers so they can be weeded out of training datasets. It assesses consistency, uniqueness, precision, accuracy, timeliness, completeness, coverage and volumetrics, helping ensure that your data is accurate, precise and complete, which is crucial for LLM training and usage.
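To make the idea of profiling and rule inference more concrete, here is a minimal, generic sketch in Python. It is not Qualytics' implementation or API: the function names (`profile`, `infer_range_rule`, `filter_outliers`), the sample records, and the use of Tukey's interquartile-range (IQR) fences as the inferred rule are all illustrative assumptions. The sketch measures a field's completeness and uniqueness, infers an allowed numeric range from historical values, and then flags incoming records whose value is missing or falls outside that range.

```python
from statistics import quantiles

# Hypothetical helpers for illustration only; not part of any Qualytics API.


def profile(records, fields):
    """Compute simple completeness and uniqueness ratios for each field."""
    n = len(records)
    report = {}
    for field in fields:
        present = [r.get(field) for r in records if r.get(field) is not None]
        report[field] = {
            # Share of records where the field is populated.
            "completeness": len(present) / n if n else 0.0,
            # Share of populated values that are distinct.
            "uniqueness": len(set(present)) / len(present) if present else 0.0,
        }
    return report


def infer_range_rule(values, k=1.5):
    """Infer an allowed numeric range from historical values (Tukey's IQR fences)."""
    q1, _, q3 = quantiles(values, n=4)  # needs at least two data points
    iqr = q3 - q1
    return (q1 - k * iqr, q3 + k * iqr)


def filter_outliers(records, field, bounds):
    """Split records into those passing the range rule and those flagged."""
    lo, hi = bounds
    kept, flagged = [], []
    for r in records:
        value = r.get(field)
        # Missing values are flagged too, since they fail the completeness check.
        if value is None or not (lo <= value <= hi):
            flagged.append(r)
        else:
            kept.append(r)
    return kept, flagged


# Illustrative "historical" data used to infer the rule.
history = [{"id": i, "amount": a} for i, a in enumerate([10, 12, 11, 13, 12, 11, 10, 14])]
print(profile(history, ["amount"]))  # {'amount': {'completeness': 1.0, 'uniqueness': 0.625}}

bounds = infer_range_rule([r["amount"] for r in history])
print(bounds)  # (6.5, 16.5)

# New records checked against the inferred rule before they reach a training set.
incoming = [{"id": 100, "amount": 12}, {"id": 101, "amount": 950}, {"id": 102, "amount": None}]
kept, flagged = filter_outliers(incoming, "amount", bounds)
print([r["id"] for r in kept])     # [100]
print([r["id"] for r in flagged])  # [101, 102]
```

Quartile-based fences are used here because quartiles are robust to the very outliers being hunted; a real platform would infer many more rule types across many fields and sources.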

Bias Detection and Mitigation: One of the most significant challenges with LLMs is bias. Qualytics employs advanced algorithms to detect and mitigate bias in your data, making LLM outputs fairer and more reliable.

Customizable Data Governance: Qualytics allows you to establish data governance policies tailored to your organization’s specific needs. You can define data quality standards and automate data quality checks, reducing the risk of data-related issues.

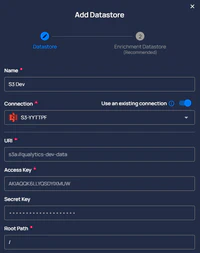

Scalability and Integration: Whether you have a small dataset or vast amounts of data, Qualytics is scalable to meet your needs. It seamlessly integrates with your existing data infrastructure and can handle data from diverse sources.

Real-time Monitoring: To maintain data quality over time, Qualytics provides real-time monitoring and alerts for data quality deviations. This proactive approach ensures that issues are addressed promptly.

Conclusion

In an era where GenAI is becoming a cornerstone of innovation and progress, impeccable data quality is paramount. Platforms like Qualytics not only fulfill this essential need but also offer distinctive features such as on-premises deployment, broad integration capabilities, and scalability. For organizations aiming to capitalize on the transformative power of GenAI, investing in a platform like Qualytics is not just a strategic move; it's a foundation for future-proofing their technology landscape. As we advance into an AI-dominant future, superior data quality will increasingly become the differentiator for success and sustainability.

By Eric Simmerman / Nov 30, 2023