We’re excited to share that Qualytics now integrates deeply with Databricks Workflows, bringing data quality monitoring and advanced anomaly detection directly into the Databricks streaming data environment. The integration is especially valuable for teams tackling data governance and large-scale data operations head-on, because data never has to leave the Databricks ecosystem. Going well beyond basic observability, Qualytics applies its advanced data quality capabilities in real time to Databricks Workflows and Delta Live Tables. This is a game-changer: both inferred and authored rules, including complex ones, can be applied at every step of a pipeline while staying fully compliant with regulatory standards and security requirements.

Your Data in Databricks, Powered by Qualytics

For teams navigating the complex waters of data governance, regulatory compliance, and data custody, any data leaving the Databricks ecosystem, sensitive or not, is a major information security concern. That is why pushing our internal processes down into Databricks matters. Our profiling and inference engines run directly within Databricks, and so does our runtime engine, which means your data never has to leave the Databricks ecosystem to benefit from advanced data quality capabilities. This setup addresses a typical concern for CIOs, CISOs, and CDOs, removing data custody and transfer risks and keeping everything secure within your Databricks environment. It is also a smart way to get more out of your Databricks investment, strengthening your data governance framework and keeping you on the right side of ever-important regulatory lines.

Photon Integration Boosts Analysis Speed and Efficiency

The integration significantly optimizes data operations by leveraging Photon, Databricks’ next-generation query engine, which delivers substantial performance gains. Because Qualytics is built on open-source Apache Spark from the ground up, support for Photon is seamless, and Qualytics actually runs faster on it thanks to Photon’s additional optimizations. These gains do not come only from avoiding data transfer delays and networking overhead: the Databricks engine is itself faster and more optimized than open-source Spark, and everyone benefits when it can be used at scale.

A Leap Ahead in Automating Data Quality Lifecycle

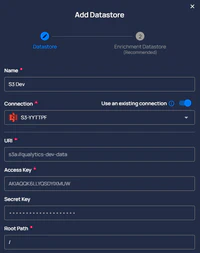

Our integration with Databricks Workflows lets clients build a complete Data Quality (DQ) lifecycle workflow right within Databricks. Because Qualytics connects to Databricks Workflows through APIs, all data orchestration can run within the Databricks ecosystem, with Qualytics adding a layer of data quality intelligence on top. This setup automates the journey of your data through our platform for detailed anomaly detection. Imagine a pipeline in Databricks Workflows that automatically triggers, through our API, a Qualytics scan of raw data from a Delta Live Table, pinpoints anomalies, and populates a target Delta Live Table with those anomalies and the metadata describing what triggered them. A pipeline integrated this deeply pushes data quality closer to the edge and lets you take advanced remediation steps entirely within your control.
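
The trigger, detect, and record steps described above can be sketched as a small orchestration function. This is a minimal sketch under stated assumptions, not Qualytics’ implementation: `trigger_scan`, `get_status`, `fetch_anomalies`, and `write_rows` are hypothetical callables standing in for the Qualytics API calls and the write to a target Delta Live Table, and the status strings are assumed. Passing them in keeps the orchestration logic testable outside Databricks.

```python
import time
from typing import Any, Callable, Dict, List

# HYPOTHETICAL signatures: stand-ins for Qualytics API calls and a table writer,
# not real Qualytics or Databricks functions.
TriggerScan = Callable[[str], str]                     # container name -> operation id
GetStatus = Callable[[str], str]                       # operation id -> "running" | "success" | "failure"
FetchAnomalies = Callable[[str], List[Dict[str, Any]]]  # operation id -> anomaly records
WriteRows = Callable[[List[Dict[str, Any]]], None]      # appends rows to the target table


def scan_and_record(
    container: str,
    trigger_scan: TriggerScan,
    get_status: GetStatus,
    fetch_anomalies: FetchAnomalies,
    write_rows: WriteRows,
    poll_seconds: float = 10.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[Dict[str, Any]]:
    """Trigger a scan, poll until it finishes, then write its anomalies to a target table."""
    operation_id = trigger_scan(container)
    deadline = clock() + timeout_seconds

    # Poll the (assumed) status until the scan succeeds, fails, or times out.
    while True:
        status = get_status(operation_id)
        if status == "success":
            break
        if status == "failure":
            raise RuntimeError(f"scan {operation_id} failed")
        if clock() >= deadline:
            raise TimeoutError(f"scan {operation_id} did not finish in {timeout_seconds}s")
        sleep(poll_seconds)

    # Attach metadata so each row records which container and scan flagged it.
    anomalies = fetch_anomalies(operation_id)
    rows = [{**a, "container": container, "operation_id": operation_id} for a in anomalies]
    if rows:
        write_rows(rows)
    return rows
```

In a Databricks Workflows task, `write_rows` might wrap a Spark DataFrame append to the target table; the polling interval and timeout are illustrative defaults only.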

The Qualytics Touch: Enhanced Anomaly Detection

This example shows how such a pipeline can be set up. Starting from source Delta Live Tables for Customer, Order, and Supplier, the workflow triggers a scan in Qualytics (configured via the API) and evaluates a condition: when an anomaly is found, it populates an Enrichment store and stops the workflow before any further data is processed. All of these steps are optional. Users can run Qualytics as a side-car that only records findings or as an intervention that halts the pipeline, and can even base interventions on the severity or tags associated with a rule or anomaly.
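
The side-car versus intervention choice can be expressed as a small gating policy. The sketch below assumes a three-level severity scale and hypothetical `Anomaly`, `Policy`, and `HaltWorkflow` types; it illustrates the idea and is not Qualytics’ rule model. Every anomaly is recorded in the enrichment store; in intervention mode, anomalies at or above a severity threshold, or carrying a blocking tag, raise an exception. Raising from a Databricks job task marks that task as failed, so downstream tasks that depend on it with the default "all succeeded" condition do not run, which is one way to stop the workflow from processing further data.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List

# HYPOTHETICAL severity scale; a real deployment would use its own levels.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class Anomaly:
    rule: str
    severity: str
    tags: FrozenSet[str] = frozenset()


@dataclass
class Policy:
    mode: str = "sidecar"                    # "sidecar": record only; "intervention": may halt
    halt_at_severity: str = "high"           # minimum severity that halts in intervention mode
    halt_tags: FrozenSet[str] = frozenset()  # any of these tags also halts


class HaltWorkflow(Exception):
    """Raised to fail the current task so that dependent tasks do not run."""


def should_halt(anomaly: Anomaly, policy: Policy) -> bool:
    """Return True if this anomaly should stop the pipeline under the policy."""
    if policy.mode != "intervention":
        return False
    severe = SEVERITY_RANK[anomaly.severity] >= SEVERITY_RANK[policy.halt_at_severity]
    return severe or bool(anomaly.tags & policy.halt_tags)


def gate(anomalies: Iterable[Anomaly], policy: Policy, enrichment_store: List[Anomaly]) -> None:
    """Record all anomalies, then halt if any of them is blocking."""
    found = list(anomalies)
    enrichment_store.extend(found)  # always record, even in side-car mode
    blocking = [a for a in found if should_halt(a, policy)]
    if blocking:
        names = ", ".join(a.rule for a in blocking)
        raise HaltWorkflow(f"halting on {len(blocking)} anomaly(ies): {names}")
```

For example, `Policy("intervention", "high", frozenset({"pii"}))` would record everything but halt on any high-severity anomaly or any anomaly tagged `pii`, while the default `Policy()` only records.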

Safeguard your Data for the Future

By integrating Qualytics with Databricks, we’re enhancing data governance frameworks, streamlining analysis processes, and providing holistic management of the data quality lifecycle, all within a secure and reliable ecosystem. This integration is about empowering our clients to fully leverage their data’s potential. Through automated checks and anomaly detection, we’re not just saving time; we’re also adding a layer of reliability, ensuring that every data-driven decision is informed by data that’s both clean and compliant.

We’re excited for our clients to discover how this integration can transform their data operations, fortify data governance, and elevate investment strategies. With Qualytics and Databricks, you’re equipped to face the future with data quality that’s not only top-notch but also secure, efficient, and compliant, ready to tackle the demands of both today and tomorrow.

Reach out to our team to discuss how our integration with Databricks can safeguard your data for the future.

By Team Qualytics / On Mar 01, 2024